AI Workflow Platform

Built for Developers

Four Core Features

Boosting efficiency across the entire development and deployment pipeline

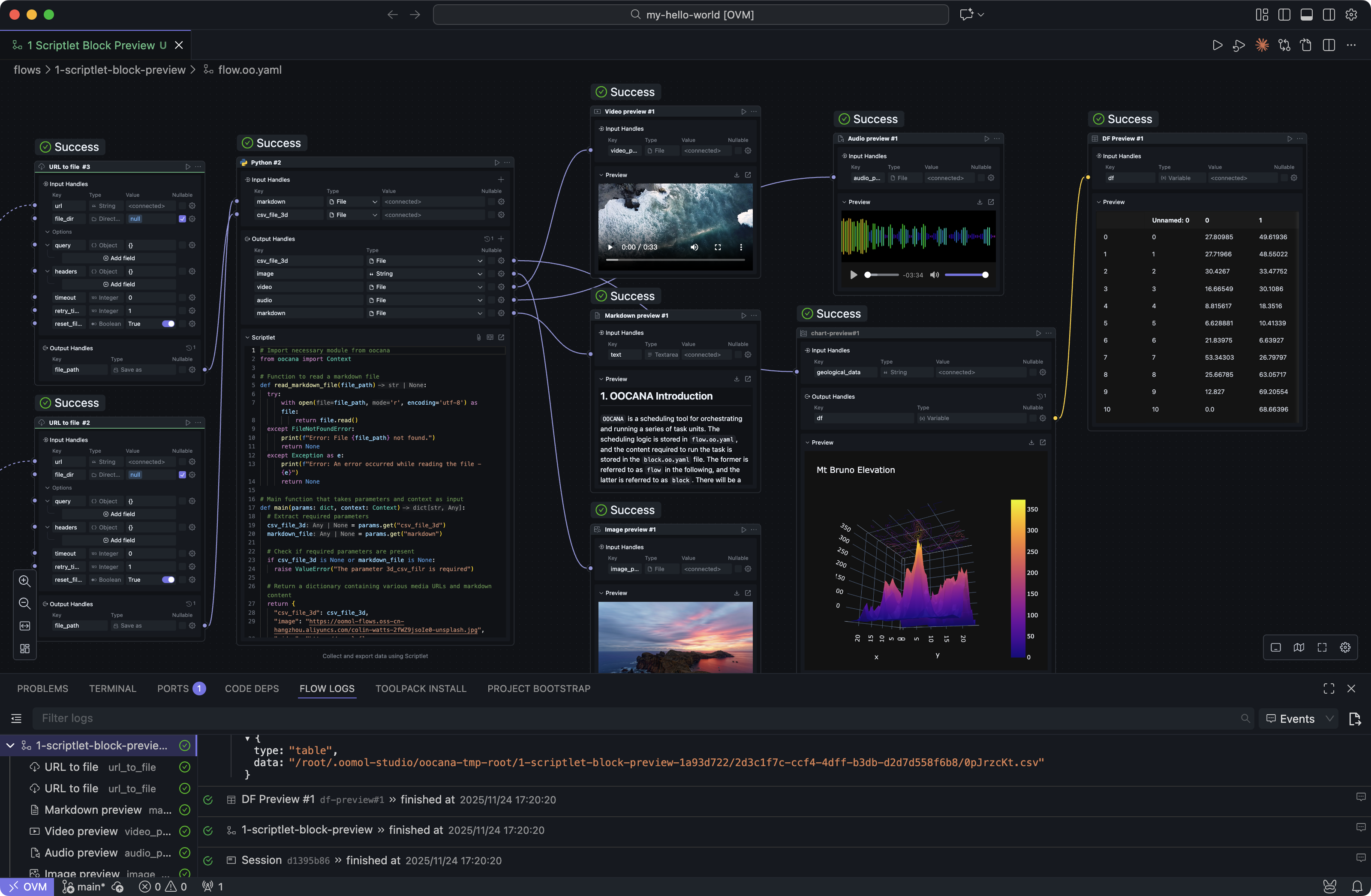

01|Node as Function: No New Framework to Learn

• Clear input/output definitions, so external calls work just like calling an API

• Import any open-source library, so feature expansion never hits a bottleneck

• Native VS Code experience

• AI auto-generates nodes, and the Vibe Coding Block speeds up development
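To make "node as function" concrete, here is a minimal sketch in Python, assuming a node is a plain async `main(params, context)` function like the one in the Fusion API template on this page. The `word_count`-style node body, `NodeContext`, and `StubContext` are hypothetical illustrations, not part of the OOMOL or oocana API; they only show that a node with declared inputs and outputs can be called and tested like any other function.

```python
import asyncio
from typing import Protocol, TypedDict


# Declared inputs and outputs for this hypothetical node.
class Inputs(TypedDict):
    text: str


class Outputs(TypedDict):
    words: int
    upper: str


class NodeContext(Protocol):
    """Hypothetical minimal context interface; the real oocana Context offers more."""

    async def oomol_token(self) -> str: ...


class StubContext:
    """Hypothetical stand-in context, used only for local testing."""

    async def oomol_token(self) -> str:
        return "test-token"


async def main(params: Inputs, context: NodeContext) -> Outputs:
    # The node body is ordinary Python: read inputs, compute, return outputs.
    text = params["text"]
    return {"words": len(text.split()), "upper": text.upper()}


if __name__ == "__main__":
    # Calling the node is just awaiting an async function.
    result = asyncio.run(main({"text": "node as function"}, StubContext()))
    print(result)  # {'words': 3, 'upper': 'NODE AS FUNCTION'}
```

Because the node is just a function, a stub context is enough to exercise it in a unit test without starting any workflow runtime.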

02|Local Containerized Development: Production-Ready Deployment

• No environment setup required; works out of the box

• Strong isolation that keeps the host machine clean

• Local environment identical to the cloud environment

03|Device as Server: Put Idle Computing Power to Work

• Turn personal computers and NAS devices into servers instantly

• Put otherwise idle GPUs to work (NVIDIA GPUs are supported under WSL2)

• Private deployment with remote access anytime

04|One-Click Publishing: API / MCP / Automation

• Serverless API services

• MCP tools that integrate directly with AI applications

• Timer- and trigger-based automation workflows

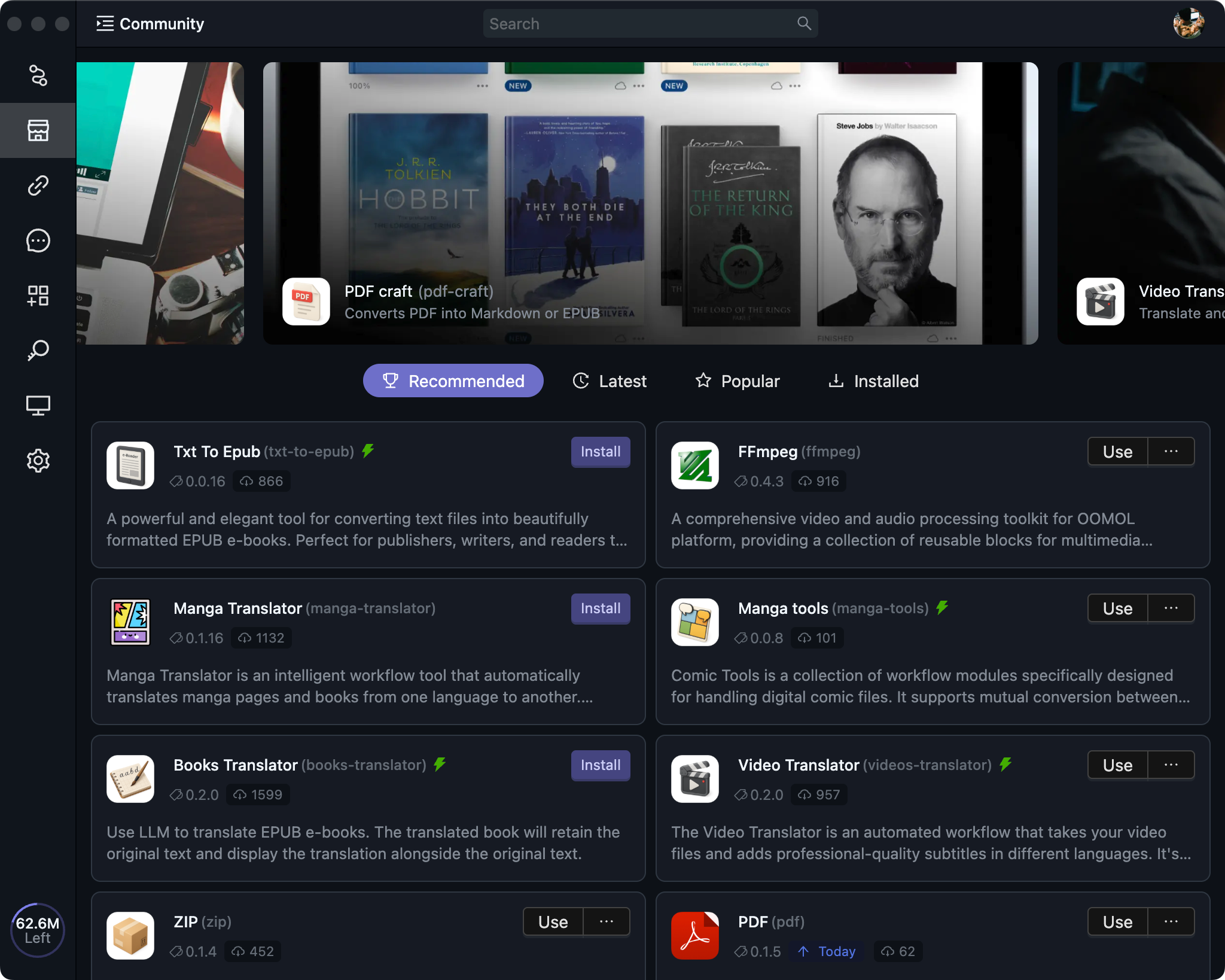

Publish and Share

Join our vibrant community to share your creations, discover amazing tools, and collaborate with fellow creators.

Choose Your Deployment Mode

From local development to enterprise deployment, OOMOL offers flexible product formats for different scenarios. Three modes, one experience.

Studio

Headless

Cloud

Fusion APIs & LLM Access

Use a single OOMOL API token to access common APIs and mainstream LLMs, for a simpler, more unified development experience.

Python:

```python
from oocana import Context

#region generated meta
import typing
Inputs = typing.Dict[str, typing.Any]
Outputs = typing.Dict[str, typing.Any]
#endregion

async def main(params: Inputs, context: Context) -> Outputs | None:
    # Fusion API Base URL
    api_url = "https://fusion-api.oomol.com/v1/fal-nano-banana-edit/submit"
    # LLM Base URL, can use openai sdk
    llm_base_url = "https://llm.oomol.com/v1"
    # Get OOMOL token from context (no need for manual API key input)
    api_token = await context.oomol_token()
    return {
        # "output": "output_value"
    }
```

TypeScript:

```typescript
//#region generated meta
type Inputs = {};
type Outputs = {};
//#endregion

import type { Context } from "@oomol/types/oocana";

export default async function (
  params: Inputs,
  context: Context<Inputs, Outputs>
): Promise<Partial<Outputs> | undefined | void> {
  // Fusion API Base URL
  const api_url = "https://fusion-api.oomol.com/v1/fal-nano-banana-edit/submit";
  // LLM Base URL, can use openai sdk
  const llm_base_url = "https://llm.oomol.com/v1";
  // Get OOMOL token from context (no need for manual API key input)
  const api_token = await context.getOomolToken();

  // return { output: "output_value" };
}
```
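The template notes that the LLM base URL works with the OpenAI SDK, which implies an OpenAI-compatible endpoint. Assuming that, the sketch below uses only the Python standard library to build (but not send) a chat completion request the way the OpenAI SDK would: a POST to `{base_url}/chat/completions` with the token as a Bearer credential. The helper names `build_chat_request` and `send`, and the model name you pass in, are hypothetical; check OOMOL's documentation for the models actually available.

```python
import json
import urllib.request

# LLM base URL from the template; assumed to be OpenAI-compatible.
LLM_BASE_URL = "https://llm.oomol.com/v1"


def build_chat_request(
    token: str, model: str, prompt: str, base_url: str = LLM_BASE_URL
) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request (hypothetical helper)."""
    body = {
        "model": model,  # assumption: use a model name OOMOL actually serves
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            # The OOMOL token stands in for an API key, as in the template.
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response (needs network access)."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

Inside a node, pass `await context.oomol_token()` as `token`. Since `send` blocks, an async node may prefer to run it via `asyncio.to_thread`. With the OpenAI Python SDK, the equivalent is constructing the client with `base_url=llm_base_url` and `api_key=api_token`.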

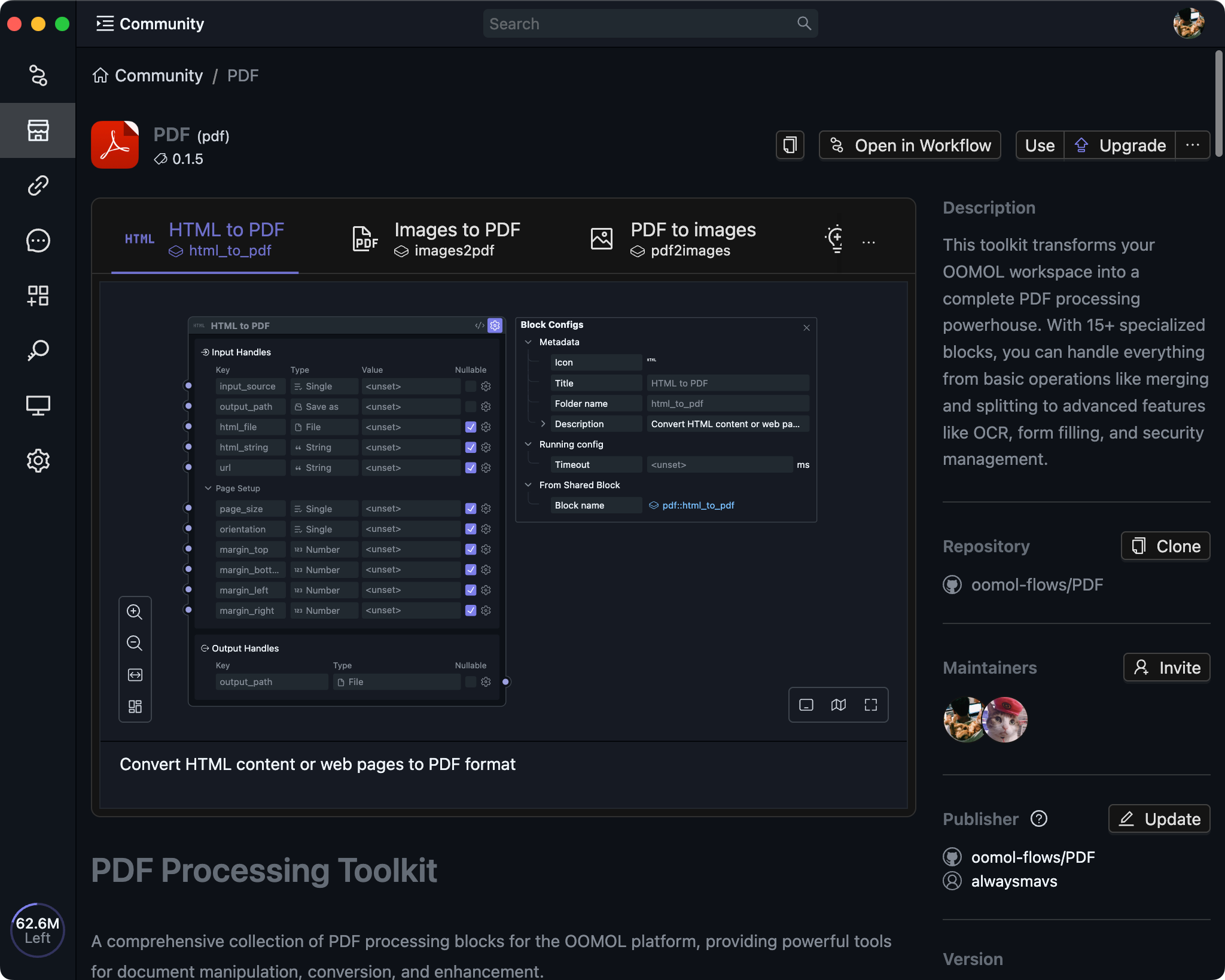

Open Source Project Built on OOMOL: PDF-Craft

Experience the power of local computing with our flagship open-source project built on OOMOL

PDF-Craft

PDF-Craft is OOMOL's official open-source PDF-to-eBook conversion tool, with 3,000+ GitHub stars. Run it locally for free, share it with friends via OOMOL, or use the official API service; there is an option to fit every need.

Local Computing, Free & Private

- Prepare a PC with an NVIDIA RTX 3060 or better GPU

- Install OOMOL Studio for free local deployment

- Run it with a Mini App or a Flow

Pay-as-you-go, Ready to Use

- Officially maintained, quality guaranteed

- Available on the PDF-Craft website

- API service for developers